Retry data load via ProcessingHub Manager

Most Salesforce admins and power users know Salesforce Dataloader. When designing Dataload Retry Management, our goal was to provide a similar experience, as outlined in the following table.

| Salesforce Dataloader | ProcessingHub Dataload Retry Management |
|---|---|
| CSV files to load | We prepare every data load in a staging table. Each row in a Dataloader CSV file corresponds to a row in the ProcessingHub staging table. |
| Success and error files | For every record that we try to push to Salesforce, we track and store whether the push succeeded or failed. |
| Error column in the error CSV file | We store the same error message in the staging table. |
| Retry by uploading the error CSV file | ProcessingHub automatically retries when a locked row error occurs. Manual retry options are also available: “Retry All”, “Retry Batch” and “Retry 1st Failed Record”. |
| When there are no more errors, the admin proceeds to the next task | Once all staged records are successfully pushed, the process continues. |
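To make the Dataloader analogy concrete, the following Python sketch models staged rows and derives Dataloader-style success and error results from them. It is a minimal illustration under stated assumptions: the `StagedRow` class, its fields (`row_id`, `data`, `status`, `error`) and the status values are hypothetical and do not describe ProcessingHub's actual staging table schema.

```python
import csv
import io
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


# Hypothetical model of one staging table row; the real ProcessingHub schema may differ.
@dataclass
class StagedRow:
    row_id: int
    data: Dict[str, str]
    status: str = "Pending"  # assumed values: "Pending", "Success", "Failed"
    error: Optional[str] = None  # error message returned by Salesforce, if any


def split_results(rows: List[StagedRow]) -> Tuple[List[StagedRow], List[StagedRow]]:
    """Split staged rows into successes and failures, like Dataloader's two result files."""
    successes = [r for r in rows if r.status == "Success"]
    failures = [r for r in rows if r.status == "Failed"]
    return successes, failures


def to_error_csv(failures: List[StagedRow]) -> str:
    """Render failed rows as CSV text with an ERROR column, similar to a Dataloader error file.

    Assumes all rows share the same fields (one object per data load).
    """
    if not failures:
        return ""
    fieldnames = list(failures[0].data.keys()) + ["ERROR"]
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    for row in failures:
        writer.writerow({**row.data, "ERROR": row.error or ""})
    return buffer.getvalue()


# Sample data for illustration only.
rows = [
    StagedRow(1, {"Name": "Acme"}, "Success"),
    StagedRow(2, {"Name": "Globex"}, "Failed", "FIELD_CUSTOM_VALIDATION_EXCEPTION"),
]
ok, failed = split_results(rows)
error_csv = to_error_csv(failed)
```

In ProcessingHub the error message stays on the staged row itself, so there is no separate file to re-upload; the CSV rendering above only shows how the two representations line up.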

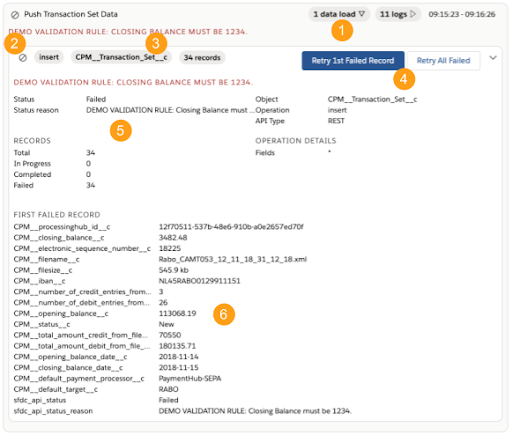

Structure

- Open the dataload details and retry functionality by clicking the dataload badge.

- Dataload status. See the section on dataload status for details.

- Key dataload information: operation, object and number of records.

- Retry buttons:
  - Retry 1st failed record.
  - Retry 1st batch (only if the API type is Bulk).
  - Retry all failed records.

- More detailed dataload information, including record counts.

- If there are failed records, all the data for the first failed record is shown. Clicking “Retry 1st Failed Record” retries this record.
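The record counts and the scope of each retry button can be sketched in Python as selections over staged rows. This is a hypothetical illustration, not ProcessingHub's implementation: the `StagedRow` fields, the ordering by `row_id`, and the interpretation of “Retry 1st batch” as resending the failed rows of the lowest-numbered batch that contains failures are all assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


# Hypothetical staged row; batch_id is only meaningful when the API type is Bulk.
@dataclass
class StagedRow:
    row_id: int
    batch_id: int
    status: str  # assumed values: "Pending", "Success", "Failed"


def record_counts(rows: List[StagedRow]) -> Dict[str, int]:
    """Count rows per status, as shown in the detailed dataload information."""
    counts = {"Total": len(rows)}
    for row in rows:
        counts[row.status] = counts.get(row.status, 0) + 1
    return counts


def all_failed(rows: List[StagedRow]) -> List[StagedRow]:
    """Scope of "Retry all failed records": every failed row."""
    return [r for r in rows if r.status == "Failed"]


def first_failed(rows: List[StagedRow]) -> Optional[StagedRow]:
    """Scope of "Retry 1st failed record": the failed row with the lowest row_id."""
    failed = all_failed(rows)
    return min(failed, key=lambda r: r.row_id) if failed else None


def first_failed_batch(rows: List[StagedRow]) -> List[StagedRow]:
    """Assumed scope of "Retry 1st batch": failed rows in the lowest-numbered failing batch."""
    failed = all_failed(rows)
    if not failed:
        return []
    batch = min(r.batch_id for r in failed)
    return [r for r in failed if r.batch_id == batch]


rows = [
    StagedRow(1, 1, "Success"),
    StagedRow(2, 1, "Success"),
    StagedRow(3, 2, "Failed"),
    StagedRow(4, 2, "Failed"),
    StagedRow(5, 3, "Failed"),
]
```

Narrowing the scope this way is what makes “Retry 1st Failed Record” a cheap way to test a fix before resending everything.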

Recommendations for retry workflow

First, assess whether the error is record-related or batch-related. Most errors are record-related; errors caused by locked rows or by a batch size that is too big are batch-related. If the error is record-related:

- Analyse the error and fields in the first failed record.

- Solve the root cause in Salesforce, for example, by removing a validation rule.

- Retry 1st failed record.

- If the dataload still fails, check whether you have at least resolved the error on that first failed record. If not, re-assess the root cause. If so, solve the next issue.

- Once you are confident that the full root cause has been resolved, use “Retry All Failed” to retry all records at once.
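The record-versus-batch assessment at the start of this workflow can be approximated by matching the stored error message against known markers. This Python sketch is a heuristic under assumptions: `UNABLE_TO_LOCK_ROW` is the Salesforce status code for locked rows, but the CPU time limit marker is only an assumed symptom of an oversized batch, and the marker list should be adapted to the errors you actually see.

```python
from enum import Enum


class ErrorKind(Enum):
    RECORD = "record-related"
    BATCH = "batch-related"


# Assumed markers for batch-related errors. UNABLE_TO_LOCK_ROW is the Salesforce
# locked-row status code; "CPU time limit exceeded" is an illustrative guess at how
# a batch that is too big might surface.
BATCH_ERROR_MARKERS = (
    "UNABLE_TO_LOCK_ROW",
    "unable to obtain exclusive access",
    "CPU time limit exceeded",
)


def classify_error(message: str) -> ErrorKind:
    """Treat an error as batch-related if it contains a known marker; otherwise record-related."""
    lowered = message.lower()
    if any(marker.lower() in lowered for marker in BATCH_ERROR_MARKERS):
        return ErrorKind.BATCH
    # Default matches the guidance that errors are almost always record-related.
    return ErrorKind.RECORD


locked = classify_error("UNABLE_TO_LOCK_ROW: unable to obtain exclusive access to this record")
validation = classify_error("FIELD_CUSTOM_VALIDATION_EXCEPTION: Phone is required")
```

Defaulting to record-related keeps the heuristic aligned with the common case; a human should still confirm the classification before changing settings.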

If the error is batch-related:

- Navigate to Setup > ProcessingHub > General Settings.

- Adjust the Bulk API settings and try again using “Retry 1st Batch”:
  - If the batch size is too big, try a lower batch size.
  - If there are locked row errors, set the concurrency mode to Serial.
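The two settings adjustments above can be expressed as a small Python function. The `BulkApiSettings` class and its field names are hypothetical stand-ins for the options under Setup > ProcessingHub > General Settings; “Parallel” and “Serial” are the Salesforce Bulk API concurrency modes, and halving the batch size is an arbitrary illustrative step, not a ProcessingHub rule.

```python
from dataclasses import dataclass, replace


# Hypothetical representation of ProcessingHub's Bulk API settings; real names may differ.
@dataclass(frozen=True)
class BulkApiSettings:
    batch_size: int
    concurrency_mode: str  # Salesforce Bulk API modes: "Parallel" or "Serial"


def adjust_settings(settings: BulkApiSettings, locked_rows: bool, batch_too_big: bool) -> BulkApiSettings:
    """Return adjusted settings to try before clicking "Retry 1st Batch"."""
    adjusted = settings
    if batch_too_big:
        # Halve the batch size (illustrative step), never going below one record.
        adjusted = replace(adjusted, batch_size=max(1, adjusted.batch_size // 2))
    if locked_rows:
        # Serial mode processes batches one at a time, which can reduce lock contention.
        adjusted = replace(adjusted, concurrency_mode="Serial")
    return adjusted


current = BulkApiSettings(batch_size=2000, concurrency_mode="Parallel")
for_locks = adjust_settings(current, locked_rows=True, batch_too_big=False)
for_size = adjust_settings(current, locked_rows=False, batch_too_big=True)
```

Changing one setting at a time, then retrying only the first batch, makes it easier to tell which change resolved the error.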

If a locked row error occurs while ProcessingHub is pushing data to Salesforce, ProcessingHub automatically retries pushing the data. The automatic retry is attempted up to five times at predetermined intervals. If the locked row error persists after five attempts, follow the steps above to identify the root cause before retrying manually.
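The automatic retry behavior can be sketched in Python as a bounded retry loop over a fixed interval schedule. This is a hedged illustration, not ProcessingHub's code: the `LockedRowError` exception, the `push` callable and the interval values are assumptions, since the actual intervals are not documented here. Only locked row errors are retried; any other error propagates immediately.

```python
import time
from typing import Callable, Sequence


class LockedRowError(Exception):
    """Hypothetical exception raised when Salesforce reports UNABLE_TO_LOCK_ROW."""


# Assumed wait times in seconds, one per automatic retry (five retries in total).
RETRY_INTERVALS: Sequence[float] = (5, 15, 30, 60, 120)


def push_with_auto_retry(
    push: Callable[[], None],
    intervals: Sequence[float] = RETRY_INTERVALS,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call push(); on a locked row error, wait and retry up to len(intervals) times.

    Returns the number of retries used. Re-raises the last LockedRowError once the
    retries are exhausted, which is the point where manual root-cause analysis starts.
    """
    retries_used = 0
    while True:
        try:
            push()
            return retries_used
        except LockedRowError:
            if retries_used >= len(intervals):
                raise  # automatic retries exhausted; follow the manual workflow
            sleep(intervals[retries_used])
            retries_used += 1


# Usage: a push that hits locked rows twice, then succeeds.
attempts = {"count": 0}


def flaky_push() -> None:
    attempts["count"] += 1
    if attempts["count"] <= 2:
        raise LockedRowError("UNABLE_TO_LOCK_ROW")


waits = []
retries = push_with_auto_retry(flaky_push, sleep=waits.append)
```

Injecting `sleep` keeps the sketch testable without real waiting; a fixed schedule of increasing intervals gives competing jobs time to release their locks.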